As enterprises navigate the increasingly complex landscape of modern computing, the challenge of orchestrating workloads across diverse environments has never been more pressing. From bustling data centres to remote edge locations, organisations are seeking solutions that bridge the gap between on-premises infrastructure and public cloud platforms whilst maintaining control, security and operational efficiency. This journey from edge to cloud represents a fundamental shift in how businesses deploy and manage their containerised applications at scale.

The Evolution of Enterprise Infrastructure: Understanding Edge-to-Cloud Architecture

The traditional boundaries of enterprise IT have undergone a remarkable transformation in recent years. Where once organisations relied primarily on centralised data centres, today’s infrastructure sprawls across multiple environments, each serving distinct purposes and offering unique capabilities. This distributed approach reflects a strategic response to evolving business requirements, regulatory constraints and the relentless pursuit of competitive advantage through technology.

Defining the Modern Edge Computing Landscape

Edge computing has emerged as a critical component of enterprise architecture, fundamentally altering how organisations process and analyse data. Rather than shuttling information back to distant data centres for processing, edge infrastructure brings computational power closer to where data originates, enabling near-instantaneous insights and rapid responses to changing conditions. The growth trajectory of this sector tells a compelling story: analysts forecast that by 2023, more than half of new enterprise IT infrastructure would be deployed at the edge rather than in traditional data centres. The proliferation of connected devices underscores this shift, with projections indicating that IoT devices will reach 55.44 billion units by 2025, collectively generating approximately 73 zettabytes of data.

The adoption of containerisation technology has become central to enabling this edge revolution. Industry forecasts suggest that by 2028, roughly 80 percent of bespoke software deployed at edge locations will utilise containers, a dramatic increase from merely 10 percent in 2023. The Cloud Native Computing Foundation reports that 93 percent of organisations are either actively using containers in production environments or planning to do so. Kubernetes has become the de facto standard for container orchestration, with 96 percent of organisations using or evaluating the platform, up considerably from 83 percent in 2020 and 78 percent in 2019. Current estimates suggest over 5.6 million developers worldwide are working with Kubernetes, reflecting its widespread acceptance across the technology industry.

Why Enterprises Are Adopting Hybrid Cloud Models

The motivations driving enterprises towards hybrid and multicloud architectures are both varied and pragmatic. Research indicates that 60 percent of organisations pursue multiple infrastructure environments specifically to minimise cloud risk, recognising that over-reliance on a single provider or model creates vulnerability. In the same research, 56 percent cite the need to repatriate cloud workloads back to on-premises infrastructure, often driven by cost considerations, performance requirements or regulatory compliance. The same proportion point to artificial intelligence initiatives as a key driver, as these projects frequently demand specialised infrastructure that may not be readily available or cost-effective in public cloud environments alone.

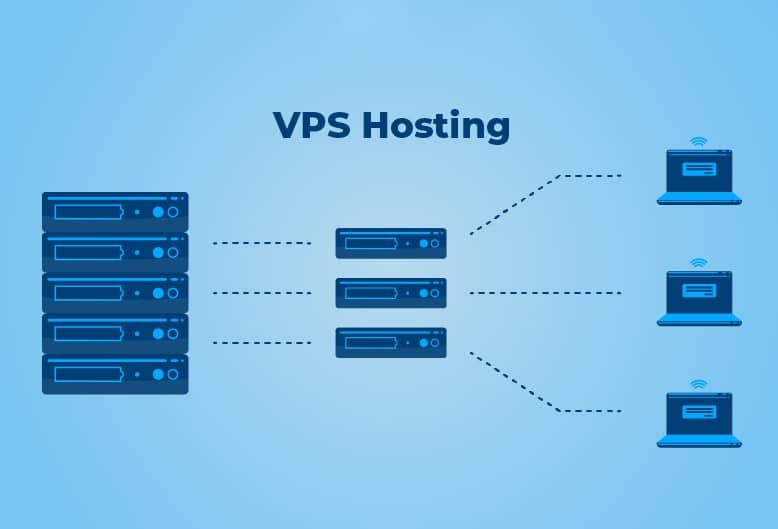

This diversity of motivations translates into a complex operational reality. Organisations typically juggle five to six different infrastructure environments for their Kubernetes deployments, spanning public cloud platforms, edge locations, virtualised data centres, bare metal facilities and various hybrid configurations. Each environment presents its own advantages and constraints, influencing what organisations can realistically deploy and how they must approach management. The challenge lies not merely in operating these disparate environments but in maintaining consistency, security and visibility across them all. Services such as OVHcloud's managed Rancher offering exemplify the industry's response to this complexity, providing tooling designed to unify management across heterogeneous infrastructure landscapes.

Kubernetes Management Challenges Across Distributed Environments

Whilst Kubernetes offers powerful capabilities for container orchestration, deploying it at enterprise scale across distributed environments introduces significant operational complexities. The very flexibility that makes Kubernetes attractive also creates management challenges, particularly when organisations must maintain multiple clusters across diverse infrastructure types whilst ensuring consistency, security and compliance.

Complexity of Multi-Cluster Orchestration

Managing numerous Kubernetes clusters across different environments demands sophisticated coordination mechanisms. Organisations require centralised visibility into their entire container estate whilst maintaining the ability to enforce policies consistently regardless of where workloads actually run. This challenge becomes particularly acute at edge locations, where infrastructure often operates with limited local resources and minimal on-site technical support. Industry analysts observe that Kubernetes deployments at the edge necessitate new tools and operational approaches, especially when managing numerous clusters on hardware with constrained capabilities.
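To make "enforcing policies consistently regardless of where workloads run" more concrete, the sketch below evaluates a small set of policies against a fleet of clusters and reports only the clusters that violate something. It is a minimal illustration, not any product's engine: the `Cluster` record, the two example policies (a minimum Kubernetes version and a required `owner` label) and the cluster names are all hypothetical assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Cluster:
    # Hypothetical inventory record; real management platforms expose far richer metadata.
    name: str
    environment: str                 # e.g. "edge", "public-cloud", "bare-metal"
    k8s_version: tuple[int, int]     # (major, minor)
    labels: dict[str, str] = field(default_factory=dict)


# A policy is a name plus a predicate that returns True when a cluster complies.
Policy = tuple[str, Callable[[Cluster], bool]]

# Illustrative policies only; the thresholds and label names are assumptions.
POLICIES: list[Policy] = [
    ("minimum-version-1.28", lambda c: c.k8s_version >= (1, 28)),
    ("has-owner-label", lambda c: "owner" in c.labels),
]


def compliance_report(clusters: list[Cluster], policies: list[Policy]) -> dict[str, list[str]]:
    """Map each non-compliant cluster's name to the policies it violates."""
    report: dict[str, list[str]] = {}
    for cluster in clusters:
        failed = [name for name, check in policies if not check(cluster)]
        if failed:
            report[cluster.name] = failed
    return report


# Hypothetical fleet spanning three environment types.
fleet = [
    Cluster("store-042", "edge", (1, 27), {"owner": "retail-ops"}),
    Cluster("eu-west-prod", "public-cloud", (1, 29), {"owner": "platform"}),
    Cluster("dc-paris-01", "bare-metal", (1, 29)),
]

if __name__ == "__main__":
    print(compliance_report(fleet, POLICIES))
```

Because the same policy list is applied to every cluster whatever its environment, an edge node in a shop and a public cloud cluster are judged by identical rules. Here the edge cluster fails the version check and the bare metal cluster fails the labelling check.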

The operational burden extends beyond mere cluster provisioning to encompass ongoing lifecycle management, including upgrades, security patching and capacity planning. Solutions have emerged to address these challenges through automation and centralised control planes. For instance, declarative automated installers accelerate time to production by streamlining initial deployment processes. Cluster autoscaling capabilities adjust infrastructure capacity dynamically in response to workload demands, whilst lifecycle automation ensures consistent upgrades and security policy enforcement across all managed clusters. Granular cost control mechanisms, such as Kubecost integration, provide visibility into resource consumption patterns, enabling organisations to optimise spending across their container infrastructure.
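Cluster autoscaling ultimately comes down to a capacity question: given workloads that cannot currently be scheduled, how many extra nodes are needed? The sketch below estimates this with first-fit decreasing bin packing on CPU requests alone. It is a deliberately simplified assumption. Production autoscalers also weigh memory, affinity rules, node pools and scale-down, and the function name, parameters and figures here are hypothetical.

```python
def nodes_to_add(
    pending_cpu_millicores: list[int],
    node_capacity_millicores: int,
    max_new_nodes: int,
) -> tuple[int, list[int]]:
    """Estimate how many identical new nodes would fit all pending pods.

    Uses first-fit decreasing bin packing on CPU requests only. Returns the
    node count (capped at max_new_nodes) and any requests larger than a whole
    node, which no amount of scaling with this node size can satisfy.
    """
    free: list[int] = []            # remaining CPU on each hypothetical new node
    unschedulable: list[int] = []
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_capacity_millicores:
            unschedulable.append(request)
            continue
        # Place the pod on the first new node with enough spare capacity.
        for i, remaining in enumerate(free):
            if request <= remaining:
                free[i] -= request
                break
        else:
            # No existing new node fits, so open another one.
            free.append(node_capacity_millicores - request)
    # Respect the configured ceiling; pods beyond it simply stay pending.
    return min(len(free), max_new_nodes), unschedulable


if __name__ == "__main__":
    pending = [1500, 500, 2500, 1000, 3000]    # CPU requests in millicores
    print(nodes_to_add(pending, node_capacity_millicores=4000, max_new_nodes=10))
```

With 4000-millicore nodes the five pending requests (8500 millicores in total) pack into three nodes. The `max_new_nodes` ceiling reflects the kind of guardrail that keeps automatic scaling compatible with the cost controls mentioned above.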

The diversity of deployment options reflects the varied needs of enterprise environments. Organisations operating exclusively in public cloud might opt for a management layer running on top of managed Kubernetes services such as Azure Kubernetes Service (AKS) or Amazon Elastic Kubernetes Service (EKS). Those pursuing hybrid strategies often prefer solutions offering greater control over the underlying operating system, whilst organisations requiring full-stack consistency across environments may choose integrated approaches that extend from on-premises infrastructure through to public cloud. Centralised authorisation mechanisms enable secure access using existing identity services, whilst service mesh integration provides advanced networking capabilities for sophisticated application architectures.

Security and Compliance Considerations at Scale

Security remains paramount when managing Kubernetes across distributed environments, particularly given the sensitive nature of many enterprise workloads and stringent regulatory requirements governing data handling. The distributed nature of edge-to-cloud architectures multiplies potential attack surfaces, demanding comprehensive security frameworks that extend from hardware through to application layers. Leading approaches advocate for three fundamental principles: zero limits in handling vast numbers of edge compute nodes, zero touch deployment to simplify installations without requiring IT specialists on-site, and zero trust security offering layered protection throughout the infrastructure stack.

Compliance certifications provide essential assurance that infrastructure meets regulatory standards across jurisdictions and industries. A comprehensive compliance portfolio spans regulations, qualifications and standards: GDPR for data protection, SecNumCloud (the French ANSSI security qualification for cloud providers), HDS for healthcare data hosting in France, HIPAA for healthcare information privacy in the United States, PCI DSS for payment card security, and ISO standards such as ISO/IEC 27001 (information security management), ISO/IEC 20000-1 (service management) and ISO 22301 (business continuity). Meeting these requirements enables organisations to deploy workloads confidently across distributed infrastructure whilst maintaining regulatory compliance.

Advanced management platforms incorporate features specifically designed to address security and governance at scale. Fine-grained role-based access control enables granular permission management, ensuring users access only appropriate resources within the distributed environment. Policy compliance visualisation tools provide real-time insights into adherence to organisational standards, highlighting non-compliant resources for remediation. Automated discovery and import capabilities scan cloud environments, identifying resources such as managed OpenShift clusters and integrating them automatically into centralised management hubs. Global search functionality delivers unified visibility across multiple management hubs, enabling administrators to locate resources and assess security posture across the entire estate. These capabilities prove essential for enterprises seeking to maintain control and visibility as their Kubernetes deployments scale across increasingly diverse and distributed infrastructure environments.
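The sketch below shows how role-based access control grants permissions at that level of granularity. It is loosely modelled on the shape of Kubernetes RBAC rules (API groups, resources and verbs, with `*` as a wildcard and `""` denoting the core API group). Like Kubernetes RBAC, it is deny-by-default and purely additive: rules can only grant access, never revoke it. Everything else is a simplifying assumption, including the binding table keyed by `(user, namespace)`, the use of `"*"` as a cluster-wide namespace, and the role and user names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    # Mirrors the apiGroups / resources / verbs fields of a Kubernetes RBAC rule.
    api_groups: frozenset[str]
    resources: frozenset[str]
    verbs: frozenset[str]

    def matches(self, api_group: str, resource: str, verb: str) -> bool:
        def allowed(values: frozenset[str], value: str) -> bool:
            return "*" in values or value in values

        return (allowed(self.api_groups, api_group)
                and allowed(self.resources, resource)
                and allowed(self.verbs, verb))


def is_allowed(user, namespace, api_group, resource, verb, roles, bindings) -> bool:
    """Deny by default; allow if any rule in any role bound to the user matches.

    bindings maps (user, namespace) to a set of role names; the namespace "*"
    is a hypothetical convention here for a cluster-wide binding.
    """
    role_names = bindings.get((user, namespace), set()) | bindings.get((user, "*"), set())
    return any(rule.matches(api_group, resource, verb)
               for name in role_names
               for rule in roles.get(name, []))


# Hypothetical roles and bindings for illustration.
roles = {
    "pod-reader": [Rule(frozenset({""}), frozenset({"pods"}),
                        frozenset({"get", "list", "watch"}))],
    "deploy-admin": [Rule(frozenset({"apps"}), frozenset({"deployments"}),
                          frozenset({"*"}))],
}
bindings = {
    ("alice", "edge-store-042"): {"pod-reader"},
    ("bob", "*"): {"deploy-admin"},
}

if __name__ == "__main__":
    print(is_allowed("alice", "edge-store-042", "", "pods", "list", roles, bindings))
    print(is_allowed("alice", "edge-store-042", "", "pods", "delete", roles, bindings))
```

Scoping Alice's binding to a single edge namespace while Bob's applies everywhere illustrates how a central hub can hand local staff read-only access to one site without exposing the rest of the estate.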